Developer Portal

Step 3: Process

The Process step leverages AI models trained on your labeled data to automatically classify the remaining entities in your building.

- Train: AI models learn from the Labeled Entities you provide

- Infer: The models predict labels for the rest of the connector's data

- Results: Expert Center assigns a confidence score to each prediction

Understanding Inferred Entities

Inferred Entities are Source Entities that have been automatically classified by the AI model. The model learns from Labeled Entities to predict:

- Type classifications for Source Entities

- Associated Derived Entities

- Relationships between the Source Entity (referred to as SELF) and the specified Derived Entities

For example, if you labeled AHU-01_SAT as a "Supply Air Temperature Sensor," the model can infer that AHU-02_SAT is likely also a "Supply Air Temperature Sensor" and belongs to "AHU-02."

Each Inferred Entity receives a confidence score indicating the model's certainty in its prediction.
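To make the AHU-01_SAT example concrete, here is a minimal sketch of what an Inferred Entity carries: a type, Derived Entities, relationships to SELF, and a confidence score. The field names, the `<EQUIP>-<NN>_<POINT>` naming pattern, the "belongs to" relationship label, and the `infer_from_label` helper are all hypothetical illustrations, not Expert Center's schema or model. The real model learns these patterns. It does not apply a fixed rule. Here the caller simply supplies the confidence value.

```python
import re
from dataclasses import dataclass, field

# Hypothetical shape of an Inferred Entity (not Expert Center's actual schema).
@dataclass
class InferredEntity:
    source_name: str                                   # the Source Entity (SELF)
    entity_type: str                                   # predicted type classification
    derived_entities: list = field(default_factory=list)
    relationships: list = field(default_factory=list)  # (subject, relation, object)
    confidence: float = 0.0                            # model certainty, 0.0 to 1.0

# Assumed naming convention for this sketch: <EQUIP>-<NN>_<POINT>, e.g. "AHU-01_SAT".
NAME_PATTERN = re.compile(r"^(?P<equip>[A-Z]+-\d+)_(?P<point>[A-Z]+)$")

def infer_from_label(labeled_name, labeled_type, candidate, confidence):
    """Toy rule: if the candidate shares the labeled entity's point suffix,
    reuse its type and attach the candidate to its own equipment."""
    labeled = NAME_PATTERN.match(labeled_name)
    match = NAME_PATTERN.match(candidate)
    if not labeled or not match or labeled["point"] != match["point"]:
        return None  # no shared pattern, so nothing to infer
    equipment = match["equip"]  # e.g. "AHU-02" becomes a Derived Entity
    return InferredEntity(
        source_name=candidate,
        entity_type=labeled_type,
        derived_entities=[equipment],
        relationships=[("SELF", "belongs to", equipment)],
        confidence=confidence,
    )

result = infer_from_label("AHU-01_SAT", "Supply Air Temperature Sensor", "AHU-02_SAT", 0.97)
print(result.entity_type, result.derived_entities)
# prints: Supply Air Temperature Sensor ['AHU-02']
```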

Prerequisites

Before you begin processing, ensure that the examples your team labeled cover major equipment types and that initial assignments have been completed.

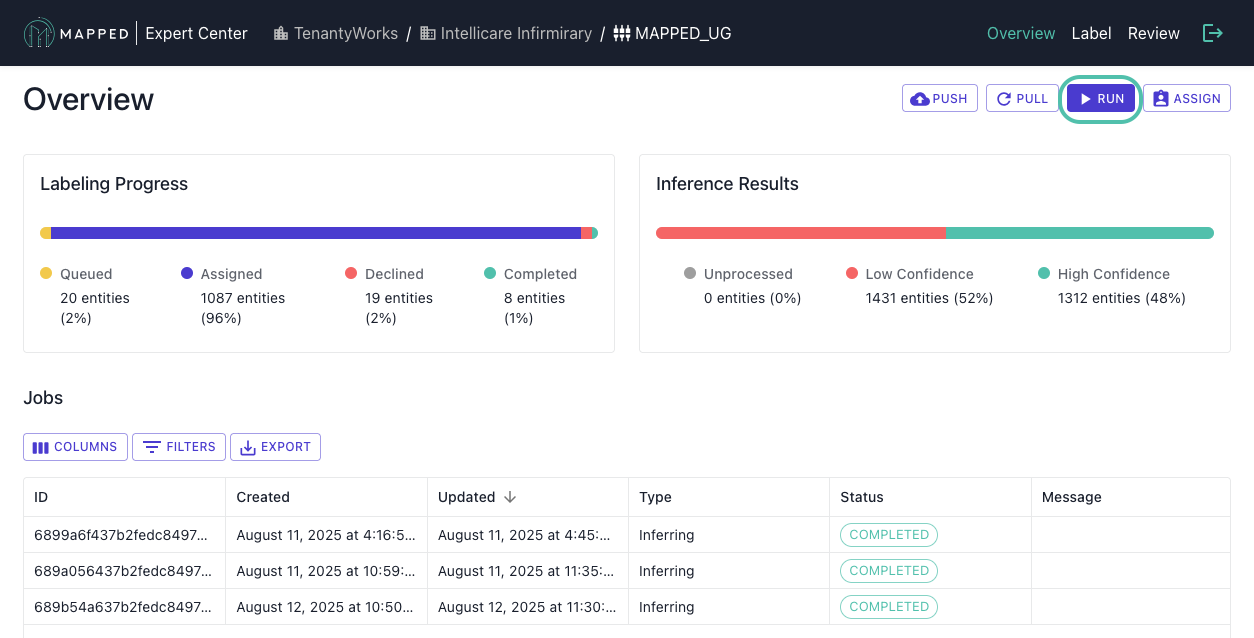
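Before clicking Run, you may want a quick check that your labeled examples cover the major equipment types. The sketch below assumes you can export labels as `(entity_name, equipment_type)` pairs. The entity names, the type list, and the `min_examples` cut-off are hypothetical, because this page does not say how many examples per type are enough.

```python
from collections import Counter

def missing_equipment_types(labeled_entities, required_types, min_examples=1):
    """Return required equipment types with fewer than min_examples labels.

    labeled_entities: iterable of (entity_name, equipment_type) pairs.
    required_types: equipment types known to exist in the building.
    min_examples: hypothetical minimum; not a documented requirement.
    """
    counts = Counter(equip_type for _, equip_type in labeled_entities)
    # Counter returns 0 for types with no labels at all.
    return sorted(t for t in required_types if counts[t] < min_examples)

labeled = [("AHU-01_SAT", "AHU"), ("AHU-01_RAT", "AHU"), ("VAV-101_DA", "VAV")]
print(missing_equipment_types(labeled, {"AHU", "VAV", "Chiller"}))
# prints: ['Chiller']
```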

How to Run

- Navigate to Connector Overview.

- Click Run.

- Monitor inference progress in the Jobs table in Connector Overview.

Expected duration: 30-90 minutes, depending on data volume.

Understanding the Process

What Happens During Inference

- Pattern Recognition: Identifies naming conventions and relationships to infer entities

- Enrichment: Applies learned patterns to unlabeled entities to classify them, derive entities, and derive relationships

- Confidence Scoring: Assigns a confidence score to each prediction
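One simple way to picture the Pattern Recognition step is to collapse entity names into naming templates. Once one member of a template is labeled, that template points to its siblings. This rule-based grouping is an illustrative stand-in chosen for this page. It is not how Expert Center's model actually recognizes patterns, and the example names are hypothetical.

```python
import re
from collections import defaultdict

def naming_template(name):
    """Replace each run of digits with '{n}' so the same point on
    different equipment collapses to one template."""
    return re.sub(r"\d+", "{n}", name)

def group_by_template(names):
    """Group entity names that share a naming template."""
    groups = defaultdict(list)
    for name in names:
        groups[naming_template(name)].append(name)
    return dict(groups)

names = ["AHU-01_SAT", "AHU-02_SAT", "AHU-01_RAT", "VAV-101_DA"]
for template, members in group_by_template(names).items():
    print(template, members)
```

In this example, "AHU-01_SAT" and "AHU-02_SAT" fall under the same template `AHU-{n}_SAT`. That is why labeling one of them gives the model a strong hint about the other.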

Monitoring Progress

Jobs Table

The Jobs table shows each inference job's status:

- Running: Currently in progress

- Completed: Successfully finished

- Failed: Error occurred
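If you track jobs from a script rather than watching the Jobs table, a polling loop like the one below could wait for a terminal status. This is a sketch under stated assumptions. `fetch_status` is a hypothetical callable you would supply, because this page documents no API for reading job status. The 3-hour timeout mirrors the warning threshold given under Completion Indicators.

```python
import time
from typing import Callable

TERMINAL_STATUSES = {"Completed", "Failed"}

def wait_for_job(fetch_status: Callable[[], str],
                 poll_seconds: float = 60.0,
                 timeout_seconds: float = 3 * 60 * 60) -> str:
    """Poll until the job reports Completed or Failed.

    fetch_status: hypothetical callable returning "Running", "Completed",
    or "Failed" (not a documented Expert Center API).
    Raises TimeoutError if the job is still running after timeout_seconds.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError("Job still Running; check system status or contact support")
        time.sleep(poll_seconds)

# Simulated job that runs for two polls, then completes.
statuses = iter(["Running", "Running", "Completed"])
print(wait_for_job(lambda: next(statuses), poll_seconds=0))
# prints: Completed
```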

Inference Results

Once the job completes, each result is assigned one of the following confidence levels:

- High Confidence (>95%): The model is highly certain of this Inferred Entity

- Medium Confidence (>80% and ≤95%): The model is moderately certain of this Inferred Entity

- Low Confidence (<80%): The Inferred Entity most likely needs review or correction by a domain expert
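The thresholds above map onto a small function. Two assumptions apply here. First, scores are expressed as fractions from 0.0 to 1.0 rather than percentages. Second, a score of exactly 80% counts as Medium, because the levels as listed do not say where that boundary falls.

```python
def confidence_level(score: float) -> str:
    """Map a confidence score (0.0 to 1.0) to High, Medium, or Low.

    Assumption: exactly 0.80 is treated as Medium; the documented levels
    (>80% Medium, <80% Low) leave that boundary unspecified.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if score > 0.95:
        return "High"
    if score >= 0.80:
        return "Medium"
    return "Low"

print(confidence_level(0.97), confidence_level(0.95), confidence_level(0.42))
# prints: High Medium Low
```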

Optimizing Inference Quality

Multiple Iterations

Don't expect perfect results on the first run. Run an initial inference, review the results for labeling gaps, label the entities in the queue, and then re-run inference to improve accuracy.

Troubleshooting

Poor Accuracy

To improve poor accuracy, label more entities in the queue, where the inference process automatically places low-confidence results. Then review your labels for consistency and check for variations in naming patterns.
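The sketch below shows one way to prioritize the labeling work: keep only Low Confidence predictions (below 80%) and put the least certain first. The `(entity_name, predicted_type, confidence)` tuple format and the example entities are hypothetical. Note that "AHU-03_SA_TEMP" illustrates the kind of naming variation that tends to produce low scores.

```python
def build_review_queue(predictions, threshold=0.80):
    """Return Low Confidence predictions, least certain first.

    predictions: iterable of (entity_name, predicted_type, confidence),
    a hypothetical export format used only for this sketch.
    """
    low = [p for p in predictions if p[2] < threshold]
    return sorted(low, key=lambda p: p[2])

preds = [
    ("AHU-02_SAT", "Supply Air Temperature Sensor", 0.97),
    ("AHU-03_SA_TEMP", "Supply Air Temperature Sensor", 0.62),
    ("VAV-204_DA", "Discharge Air Temperature Sensor", 0.41),
]
for name, predicted_type, score in build_review_queue(preds):
    print(f"{score:.2f} {name} -> {predicted_type}?")
```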

Long Processing Times

For large connectors with more than 10,000 entities, it is normal for processing to take longer than the expected 30-90 minutes. If you suspect a delay, check the system status.

Interpreting Results

- Progress: The percentage of Inferred Entities with High Confidence
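As a worked example of the Progress metric, the sketch below counts results per confidence level and reports the High Confidence share as a percentage. It assumes the scores are fractions from 0.0 to 1.0 and, as an assumption, counts exactly 0.80 as Medium. The score list is made up.

```python
from collections import Counter

def confidence_level(score):
    # Same thresholds as the Confidence Levels list; 0.80 counted as Medium (assumption).
    if score > 0.95:
        return "High"
    if score >= 0.80:
        return "Medium"
    return "Low"

def summarize(scores):
    """Return (distribution across levels, Progress as % High Confidence)."""
    if not scores:
        return {"High": 0, "Medium": 0, "Low": 0}, 0.0
    counts = Counter(confidence_level(s) for s in scores)
    distribution = {level: counts[level] for level in ("High", "Medium", "Low")}
    progress = 100.0 * distribution["High"] / len(scores)
    return distribution, progress

dist, progress = summarize([0.99, 0.97, 0.90, 0.62])
print(dist, f"{progress:.1f}%")
# prints: {'High': 2, 'Medium': 1, 'Low': 1} 50.0%
```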

Completion Indicators

You'll know processing is complete when:

- ✓ Inference Job shows Completed status

- ✓ Inference Results bar appears in Connector Overview

- ✓ Inference Results bar shows the distribution across confidence levels

⚠️ Warning signs:

- If the job status shows Failed, check the error messages and retry the job.

- If only a very small percentage of results are High Confidence, label more examples.

- If a job has been running for more than 3 hours, check the system status or contact [email protected].
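The completion indicators and warning signs can be summarized as a small decision function. The `min_high_pct` cut-off for a "very low" percentage of High Confidence results is a hypothetical parameter, because this page gives no number. The status strings match the Jobs table.

```python
def triage(status, hours_running, high_confidence_pct, min_high_pct=50.0):
    """Suggest a next step from an inference job's state.

    min_high_pct: hypothetical threshold for a "very low" share of
    High Confidence results; not a documented value.
    """
    if status == "Failed":
        return "Check the error messages and retry the job."
    if status == "Running":
        if hours_running > 3:
            return "Check system status or contact support."
        return "Keep monitoring the Jobs table."
    if status == "Completed":
        if high_confidence_pct < min_high_pct:
            return "Label more examples, then re-run inference."
        return "Proceed to Step 4: Review."
    raise ValueError(f"Unknown status: {status}")

print(triage("Completed", 1.2, 72.0))
# prints: Proceed to Step 4: Review.
```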

Next Step

With inference complete, proceed to Step 4: Review to validate predictions and ensure accuracy.