Developer Portal

Step 4: Review

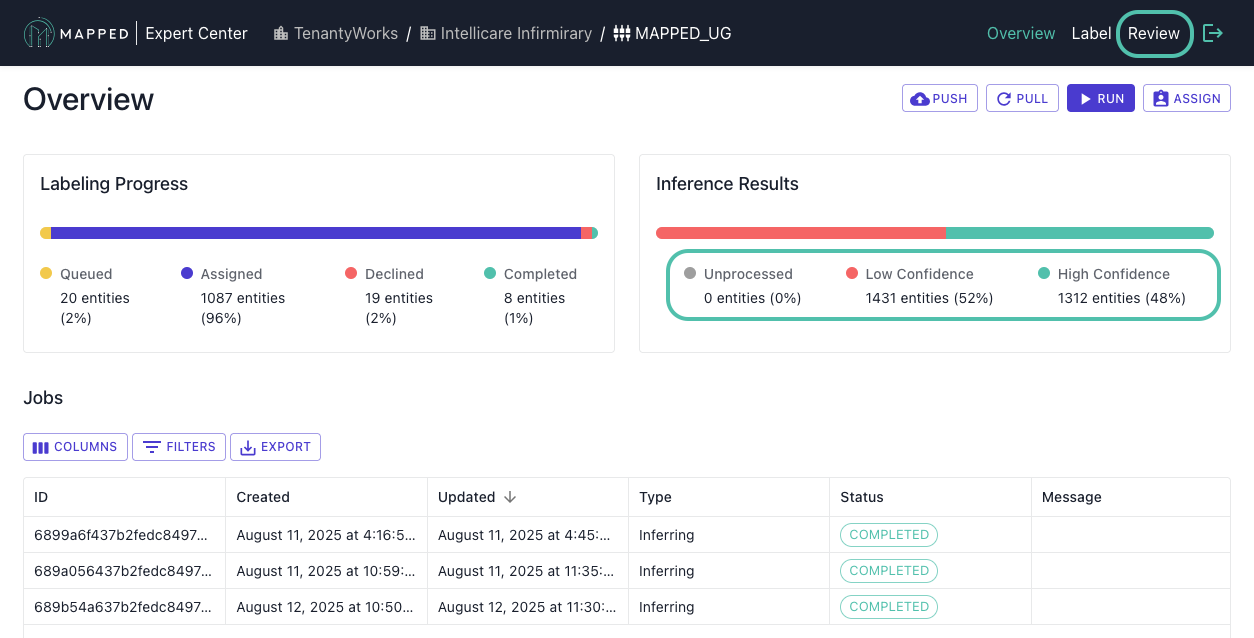

The Review step validates AI predictions through targeted human oversight before you proceed. In this step you check model predictions across confidence levels, evaluate whether Labeled Entities match the training data, and identify patterns that require additional labeling.

Interface Navigation

From Connector Overview:

To open the Review tab, either click a confidence level bar in Inference Results for a filtered view, or select Review from the header menu for a complete overview.

Review Tabs:

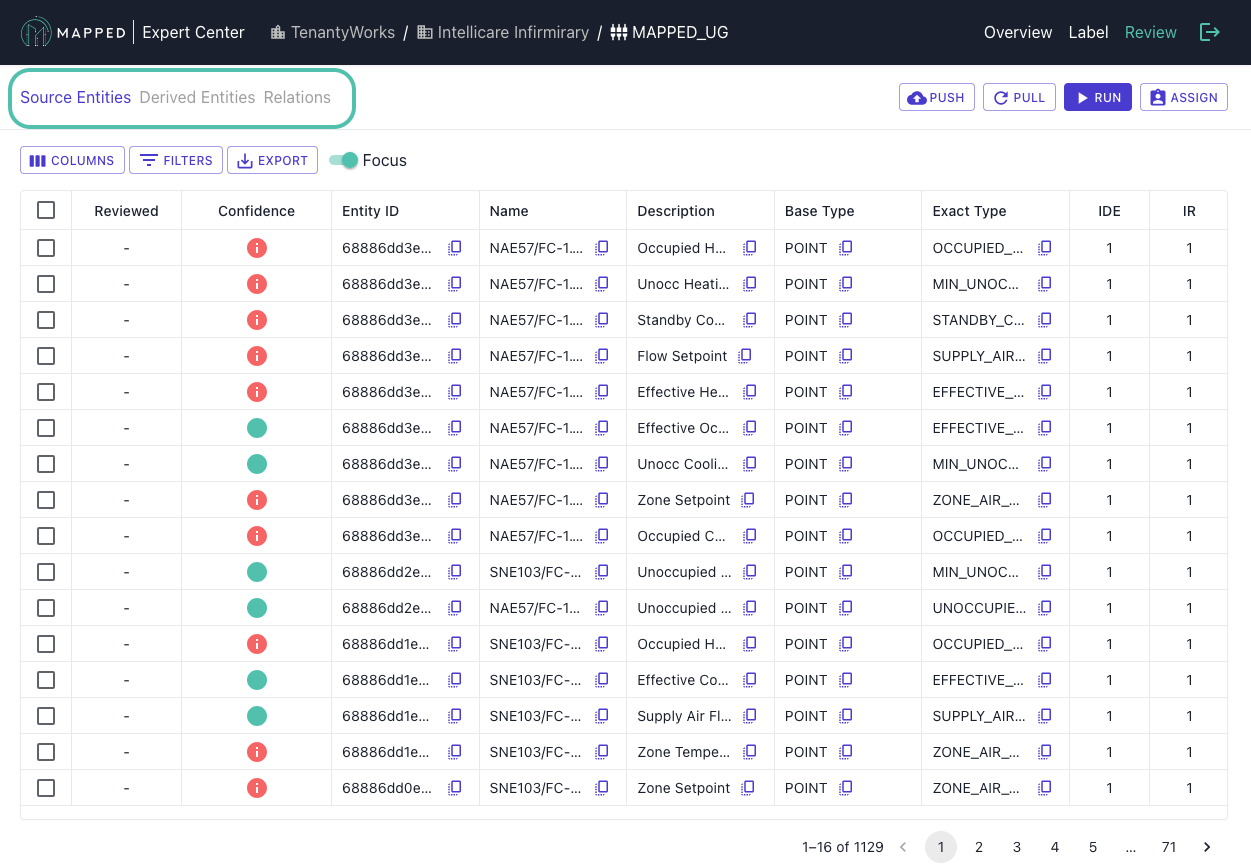

Once on the Review tab, you can drill down into entities and relations using the tabs on the left:

- Source Entities: Original entities with AI classification and enrichment

- Derived Entities: New entities inferred from Source Entity metadata

- Derived Relations: New relationships between entities from Source Entity metadata

Review Strategy

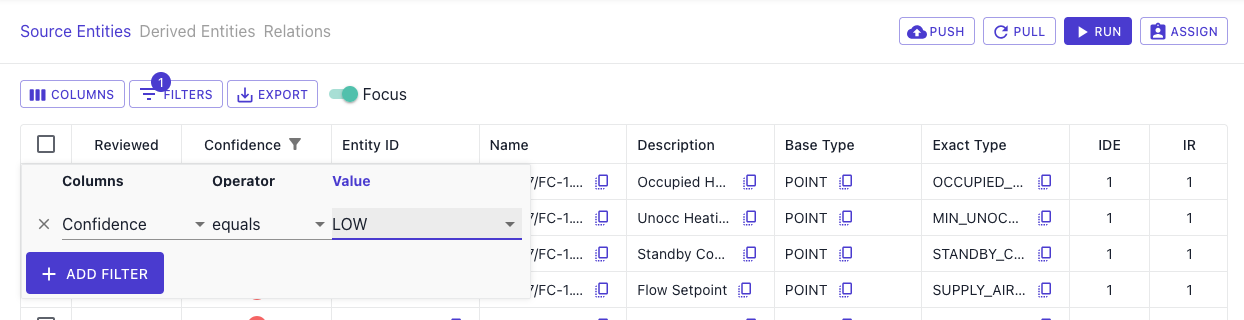

1. Start with Low Confidence (<80%)

These predictions indicate model uncertainty and guide future labeling priorities. To begin, filter by Low Confidence on the Review tab or select Low Confidence from the Inference Results bar in the Connector Overview.

Approve any obviously correct predictions and note recurring patterns for the next labeling round. Declined entities are automatically added to the Labeling Queue.

2. Spot-Check High Confidence (>80%)

Even high-confidence predictions can contain errors from training data issues. To begin, filter by High Confidence on the Review tab or select High Confidence from the Inference Results bar in the Connector Overview.

Here you can sample high confidence results and decline any incorrect classifications. Any declined entities are automatically returned to the Labeling Queue.
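If you want to plan a spot-check outside the portal, the sampling idea can be sketched in a few lines of Python. This is only an illustration: the record shape (`entity`, `label`, `confidence`), the function name `sample_high_confidence`, and the choice to treat a score of exactly 0.80 as high confidence are assumptions, not part of the product.

```python
import random

# Assumption: scores at or above 0.80 count as high confidence.
HIGH_CONFIDENCE_THRESHOLD = 0.80


def sample_high_confidence(predictions, sample_size, seed=None):
    """Return a random sample of high-confidence predictions to check by hand."""
    high = [p for p in predictions if p["confidence"] >= HIGH_CONFIDENCE_THRESHOLD]
    rng = random.Random(seed)
    # Never request more items than the high-confidence bucket contains.
    return rng.sample(high, min(sample_size, len(high)))


# Hypothetical records, for illustration only.
predictions = [
    {"entity": "acct-001", "label": "Customer", "confidence": 0.97},
    {"entity": "acct-002", "label": "Vendor", "confidence": 0.64},
    {"entity": "acct-003", "label": "Customer", "confidence": 0.88},
    {"entity": "acct-004", "label": "Partner", "confidence": 0.91},
]

spot_check = sample_high_confidence(predictions, sample_size=2, seed=7)
for record in spot_check:
    print(record["entity"], record["label"], record["confidence"])
```

A fixed `seed` makes the sample repeatable, which helps when two reviewers need to look at the same records.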

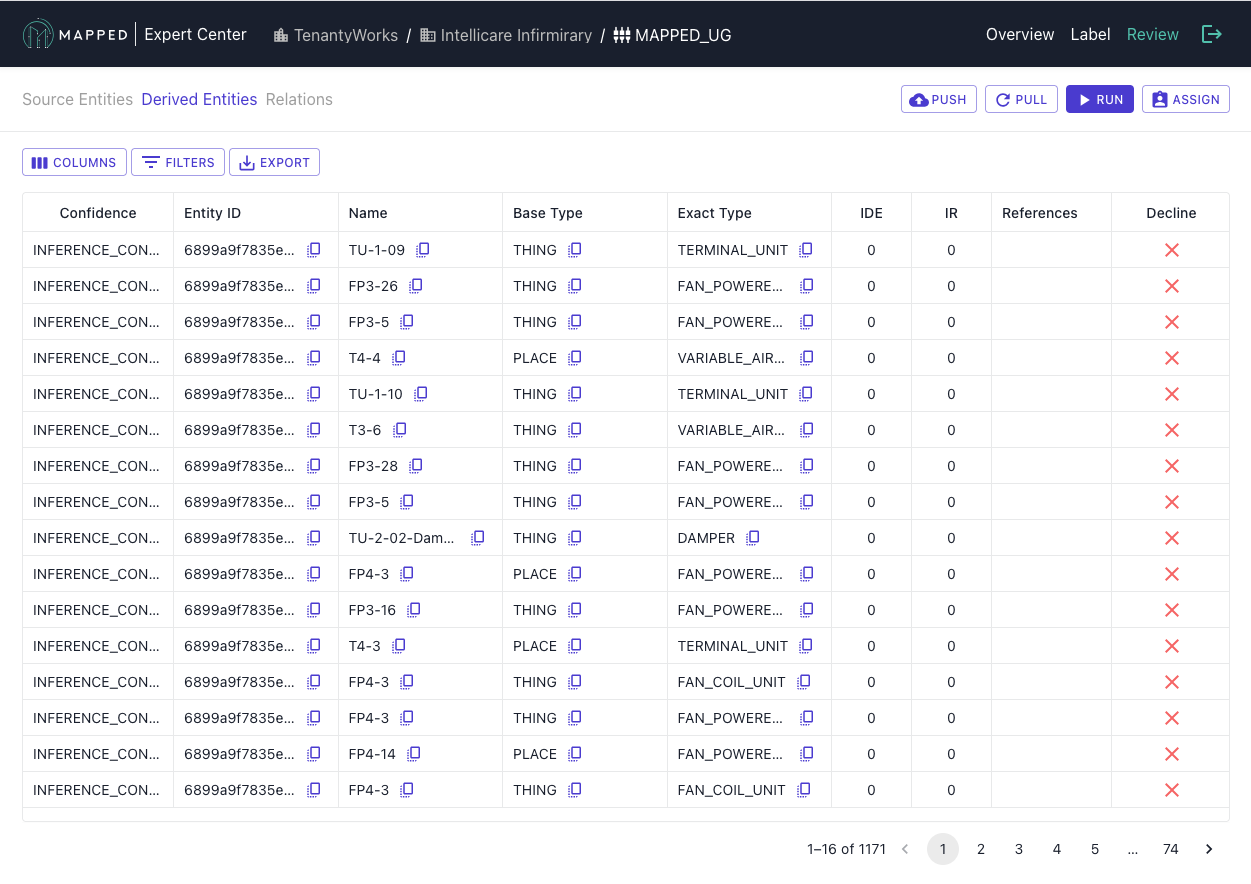

3. Validate Derived Entities

To review Derived Entities, switch to the Derived Entities tab.

If you find an incorrect classification, you can decline an entire Derived Entity row. Note that declining affects all source entities using that derived entity.

Review Actions

| Action | Source Entities | Derived Entities |
|---|---|---|
| Approve ✓ | Confirms classification | Not applicable |
| Decline ✗ | Returns to labeling queue | Rejects all dependent entities |
| Skip → | Applies automatic rules* | Not applicable |

*Automatic rules: Low confidence inferences are declined, while high confidence inferences are approved.
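The automatic rule applied on Skip can be expressed as a small function. The sketch below is an illustration under stated assumptions: the names `Decision` and `resolve_skipped` are hypothetical, and a score of exactly 0.80 is treated as high confidence because the thresholds above do not say which side it falls on.

```python
from enum import Enum


class Decision(Enum):
    APPROVED = "approved"
    DECLINED = "declined"


# Assumption: the 80% boundary itself is treated as high confidence.
LOW_CONFIDENCE_CUTOFF = 0.80


def resolve_skipped(confidence: float) -> Decision:
    """Apply the automatic rule to a skipped Source Entity inference."""
    if confidence < LOW_CONFIDENCE_CUTOFF:
        # Low confidence inferences are declined.
        return Decision.DECLINED
    # High confidence inferences are approved.
    return Decision.APPROVED


print(resolve_skipped(0.42).value)
print(resolve_skipped(0.93).value)
```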

Consider re-running inference if quality targets aren't met or if new labeling patterns are identified.

Completion Indicators

You'll know Review is complete when:

- ✓ High confidence Source Entities have been reviewed

- ✓ Derived Entities have been reviewed

- ✓ No entities have needed updates since the last inference

⚠️ Warning signs:

- If there's a high percentage of low confidence results, return to labeling more examples.

- If there are systematic errors in high confidence results, check labeling consistency.

- If there are declined derived entities, review the labeling approach for derived entities.
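The warning signs above can be turned into a rough checklist. The following is a minimal sketch, assuming review outcomes are available as plain dictionaries with `confidence` and `decision` keys. The function name `review_warnings` and both limits (`max_low_share`, `max_high_decline_rate`) are hypothetical placeholders, since no numeric cutoffs are defined here. The decline rate among high confidence results is used only as a simple proxy for systematic errors.

```python
def review_warnings(source_reviews, derived_reviews,
                    max_low_share=0.30, max_high_decline_rate=0.05):
    """Return the follow-up actions suggested by the warning signs."""
    warnings = []

    # Assumption: 0.80 is the boundary between low and high confidence.
    low = [r for r in source_reviews if r["confidence"] < 0.80]
    high = [r for r in source_reviews if r["confidence"] >= 0.80]

    # A high share of low confidence results: label more examples.
    if source_reviews and len(low) / len(source_reviews) > max_low_share:
        warnings.append("label more examples")

    # Many declined high confidence results: check labeling consistency.
    high_declined = [r for r in high if r["decision"] == "declined"]
    if high and len(high_declined) / len(high) > max_high_decline_rate:
        warnings.append("check labeling consistency")

    # Any declined derived entity: revisit the derived-entity labeling approach.
    if any(r["decision"] == "declined" for r in derived_reviews):
        warnings.append("review derived-entity labeling approach")

    return warnings


# Hypothetical review outcomes, for illustration only.
source_reviews = [
    {"confidence": 0.50, "decision": "declined"},
    {"confidence": 0.60, "decision": "approved"},
    {"confidence": 0.90, "decision": "approved"},
    {"confidence": 0.95, "decision": "approved"},
]
derived_reviews = [{"decision": "declined"}]

result = review_warnings(source_reviews, derived_reviews)
print(result)
```

Tune both limits to your own quality targets; the defaults here are arbitrary.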

Next Step

After achieving quality targets, proceed to Step 5: Unify to merge entities across connectors.